Evaluate NERSchemas


== Operation ==

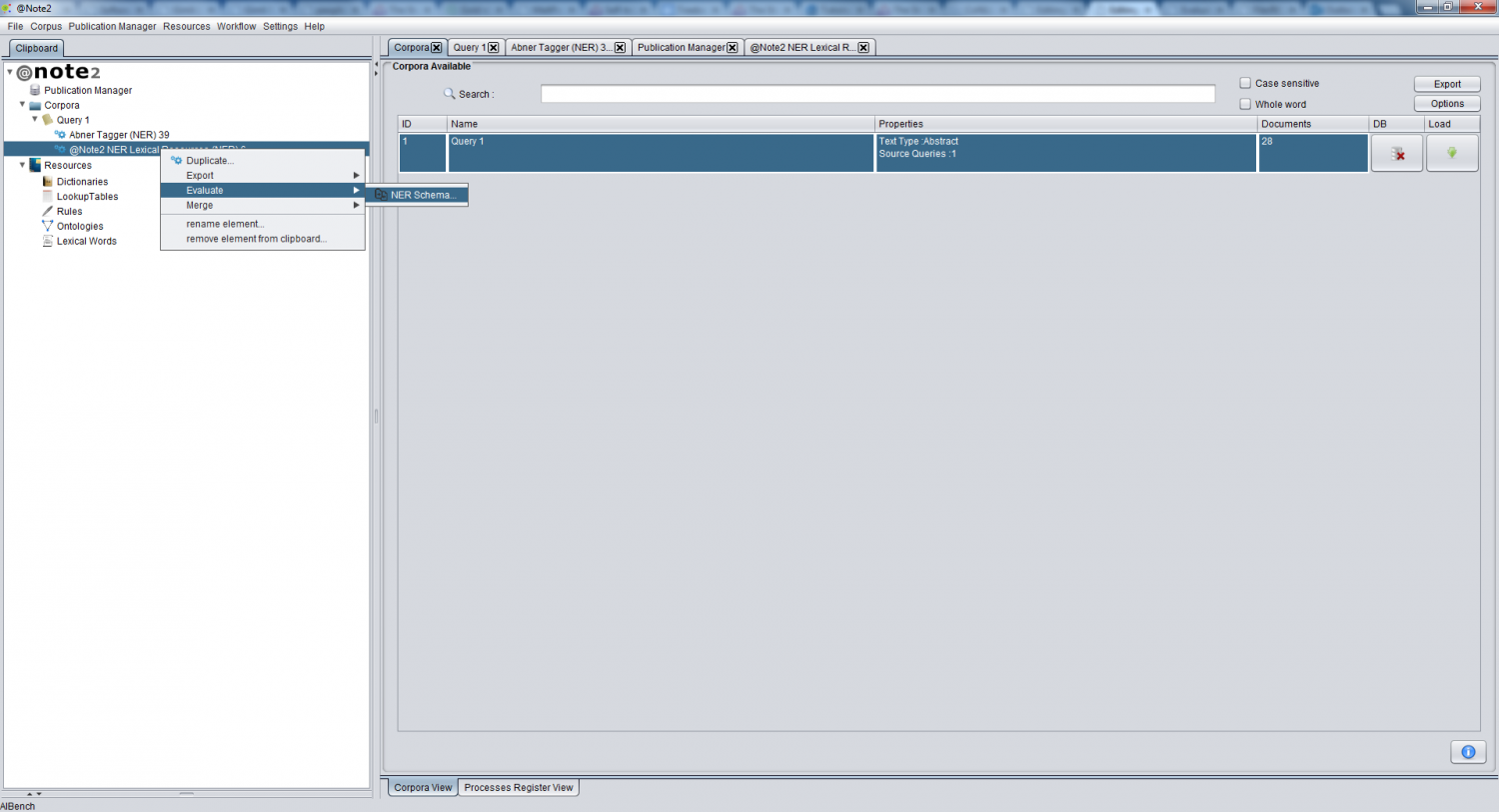

You can run an evaluation that compares two NERSchemas and computes annotation recall, precision and f-score. One NERSchema serves as the gold standard (typically taken from a corpus with manual annotations). The other is the NERSchema to compare (typically produced by running a specific algorithm or pipeline).

To start the evaluation, right-click the NERSchema Datatype to use as the gold standard and select "Evaluate -> NER Schema".
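For reference, the standard definitions of these measures are given below. They rest on three assumptions that are not documented for Anote2:

* TP counts annotations in the compared NERSchema that match a gold-standard annotation.
* FP counts compared annotations with no gold match, and FN counts gold annotations that the compared NERSchema missed.
* "f-score" means the balanced F1 measure.

The matching criterion (for example, exact versus partial span overlap) is also an assumption here.

```latex
\text{precision} = \frac{TP}{TP + FP}, \qquad
\text{recall} = \frac{TP}{TP + FN}, \qquad
F_1 = \frac{2 \cdot \text{precision} \cdot \text{recall}}{\text{precision} + \text{recall}}
```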


[[File:NERSchema Evaluate.png|center|1500px]]


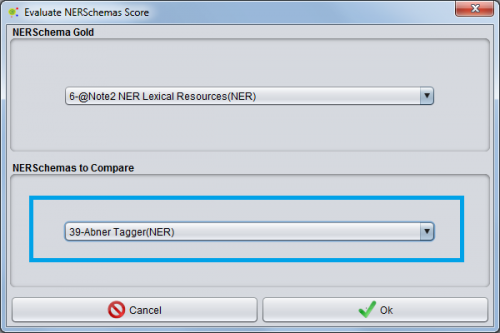

== Select NERSchema to compare ==

A GUI opens in which you choose the NERSchema Datatype (shown in blue) to compare with the gold standard selected on the clipboard.


[[File:NERSchema Evaluate GUI.png|center|500px]]

NOTE: Only NERSchemas that use the same Normalization process as the gold-standard NERSchema appear in the list.

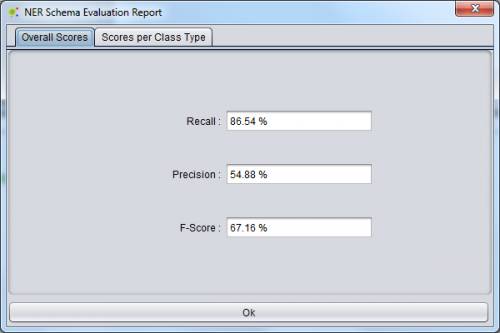

== Result ==

When the evaluation finishes, an NER schema evaluation report appears with two tabs: overall scores and scores per class type.


The overall scores tab presents annotation recall, precision and f-score computed over all annotated entities.
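The following is a minimal Python sketch of how overall scores like these can be computed. It assumes that two annotations match only when their start offset, end offset and entity class are all identical. The `Annotation` record and the `overall_scores` function are hypothetical illustrations, not part of Anote2's API.

```python
from __future__ import annotations

from typing import NamedTuple


class Annotation(NamedTuple):
    """Hypothetical annotation record: character offsets plus entity class."""
    start: int
    end: int
    entity_class: str


def overall_scores(gold: set[Annotation],
                   compared: set[Annotation]) -> tuple[float, float, float]:
    """Return (precision, recall, F1) over all annotated entities.

    Assumption: exact matching on offsets and class; Anote2 may use a
    different matching rule.
    """
    tp = len(gold & compared)   # annotations present in both schemas
    fp = len(compared - gold)   # only in the compared schema
    fn = len(gold - compared)   # only in the gold standard
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f_score = (2 * precision * recall / (precision + recall)
               if precision + recall else 0.0)
    return precision, recall, f_score
```

For example, suppose the compared schema recovers one of three gold annotations and adds two annotations with no gold match. Then precision, recall and f-score are all 1/3.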


[[File:NERchema Evaluate Result.png|center|500px]]


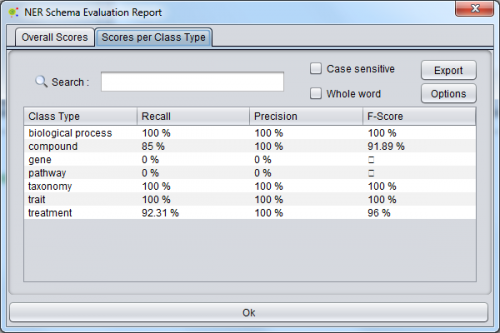

The scores per class type tab presents annotation recall, precision and f-score for each annotated entity class.
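A per-class variant of the same idea is sketched below. It assumes exact matching on offsets and class, and scores each class using only the annotations of that class. The `Annotation` record and the `prf` and `scores_per_class` functions are hypothetical and not part of Anote2.

```python
from __future__ import annotations

from collections import defaultdict
from typing import NamedTuple


class Annotation(NamedTuple):
    """Hypothetical annotation record: character offsets plus entity class."""
    start: int
    end: int
    entity_class: str


def prf(tp: int, fp: int, fn: int) -> tuple[float, float, float]:
    """Precision, recall and F1 from raw counts (0.0 when undefined)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f_score = (2 * precision * recall / (precision + recall)
               if precision + recall else 0.0)
    return precision, recall, f_score


def scores_per_class(gold: set[Annotation],
                     compared: set[Annotation]) -> dict[str, tuple[float, float, float]]:
    """Return {entity_class: (precision, recall, F1)} under exact matching."""
    counts: dict[str, list[int]] = defaultdict(lambda: [0, 0, 0])  # [tp, fp, fn]
    for ann in gold & compared:
        counts[ann.entity_class][0] += 1
    for ann in compared - gold:
        counts[ann.entity_class][1] += 1
    for ann in gold - compared:
        counts[ann.entity_class][2] += 1
    return {cls: prf(*c) for cls, c in sorted(counts.items())}
```

Under this exact-match assumption, a span tagged with the wrong class is counted twice. It is a false positive for the predicted class and a false negative for the gold class.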


[[File:RESchema Evaluate Result b.png|center|500px]]

Latest revision as of 17:32, 16 January 2015
